Arbiter2

Arbiter2 is a cgroups-based mechanism designed to prevent misuse of the head/login nodes, which are scarce, shared resources.

Status and Limits

Arbiter2 applies different limits to a user's processes depending on the user's status: normal, penalty1, penalty2, or penalty3.

Each limit also has a threshold. Currently, the thresholds for both CPU and memory are 50% of the limits. For example, if the limit is 2 virtual cores and 2 GB of memory, the threshold is 1 virtual core and 1 GB of memory.
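The threshold arithmetic can be sketched as follows. The names `THRESHOLD_FRACTION` and `thresholds` are illustrative only and are not Arbiter2 configuration keys.

```python
# Illustrative only: these names are hypothetical, not Arbiter2 settings.
THRESHOLD_FRACTION = 0.5  # thresholds are currently 50% of the limits


def thresholds(cpu_limit_cores: float, mem_limit_gb: float) -> tuple:
    """Return the (CPU, memory) usage levels at which badness starts to accrue."""
    return (cpu_limit_cores * THRESHOLD_FRACTION,
            mem_limit_gb * THRESHOLD_FRACTION)


print(thresholds(2, 2))  # (1.0, 1.0)
```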

Once a threshold has been reached or exceeded (i.e., a user's processes use 1 virtual core and/or 1 GB of memory in normal status), the user's badness score starts to increase.

Once a user reaches a badness score of 100 (this takes 10 minutes if usage stays exactly at the threshold), they will be placed into penalty1 and receive an email notification.
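As a rough illustration of the timing, the sketch below estimates how long sustained usage takes to reach a score of 100. Only the case where usage equals the threshold (10 minutes) is documented here; the assumption that the score grows in proportion to usage divided by threshold is ours, and the function name is hypothetical.

```python
MAX_BADNESS = 100          # score at which a user enters penalty1
MINUTES_AT_THRESHOLD = 10  # documented time to reach 100 when usage == threshold


def minutes_to_penalty(usage: float, threshold: float) -> float:
    """Estimate minutes of sustained usage before the score reaches MAX_BADNESS.

    ASSUMPTION: the score grows at a rate proportional to usage / threshold.
    Only the usage == threshold case is stated in this document.
    """
    if usage < threshold:
        return float("inf")  # below the threshold the score does not increase
    rate_per_minute = (MAX_BADNESS / MINUTES_AT_THRESHOLD) * (usage / threshold)
    return MAX_BADNESS / rate_per_minute


print(minutes_to_penalty(1.0, 1.0))  # 10.0
```

Under this assumption, a user running at twice the threshold would reach penalty1 in about half the time.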

Upon first login, the user is in the normal status. The normal limits apply to all of the user's processes on the head node.

Penalties and Throttling

Penalty1: Upon the first violation of the resource quotas, the user will be placed into penalty1 status. Processes will NOT BE THROTTLED. This is only a warning.

Penalty2: If a user violates the quotas again within 3 hours of their previous violation, they will enter penalty2 status. Processes will be throttled to 60% of original CPU and memory for 1 hour.

Penalty3: If a user violates the quotas for a third time within 3 hours of their previous violation, they will enter penalty3 status. Processes will be throttled to 20% of original CPU and memory for 2 hours.
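The escalation rule can be sketched as a small function. This is a minimal illustration, not Arbiter2's code: the names are hypothetical, and two behaviours are assumptions not stated above (a violation outside the 3-hour window starts again at penalty1, and penalty3 is the highest status).

```python
from datetime import datetime, timedelta
from typing import Optional

ESCALATION_WINDOW = timedelta(hours=3)  # from the penalty descriptions above
STATUSES = ["normal", "penalty1", "penalty2", "penalty3"]


def next_status(last_penalty: str,
                last_violation: Optional[datetime],
                now: datetime) -> str:
    """Return the status assigned for a violation detected at `now`.

    last_penalty is the status assigned at the previous violation
    ("normal" if there has been none).
    ASSUMPTIONS: a violation outside the 3-hour window restarts at
    penalty1, and a user already at penalty3 stays at penalty3.
    """
    if last_violation is None or now - last_violation > ESCALATION_WINDOW:
        return "penalty1"
    level = min(STATUSES.index(last_penalty) + 1, len(STATUSES) - 1)
    return STATUSES[level]


t0 = datetime(2024, 1, 1, 12, 0)
print(next_status("penalty1", t0, t0 + timedelta(hours=1)))  # penalty2
```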

| Resource | Normal | Penalty1 | Penalty2 | Penalty3 |
|---|---|---|---|---|
| CPU (virtual cores) | 2.0 | 2.0 | 1.2 | 0.4 |
| Memory (GB) | 2.0 | 2.0 | 1.2 | 0.4 |
| Duration (minutes) | n/a | 30 | 60 | 120 |
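The table values follow from multiplying the normal limits by each penalty's fraction (100%, 60%, 20%). The sketch below reproduces that arithmetic; the dictionary and function names are illustrative and do not correspond to Arbiter2 configuration keys.

```python
NORMAL_LIMITS = {"cpu_cores": 2.0, "memory_gb": 2.0}

# (fraction of the normal limit, penalty duration in minutes), per the table above.
PENALTIES = {
    "penalty1": (1.0, 30),   # warning only, limits unchanged
    "penalty2": (0.6, 60),
    "penalty3": (0.2, 120),
}


def limits_for(status: str) -> dict:
    """Return the CPU/memory limits and penalty duration for a status."""
    if status == "normal":
        return dict(NORMAL_LIMITS, duration_min=None)
    fraction, duration = PENALTIES[status]
    limits = {name: round(value * fraction, 2)
              for name, value in NORMAL_LIMITS.items()}
    limits["duration_min"] = duration
    return limits


print(limits_for("penalty2"))
# {'cpu_cores': 1.2, 'memory_gb': 1.2, 'duration_min': 60}
```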

While a user is in any penalty status, their processes are throttled if they consume more CPU time than the penalty limit allows. However, if a user's processes exceed the penalty memory limit, those processes (PIDs) will be terminated by cgroups.

Email Notification

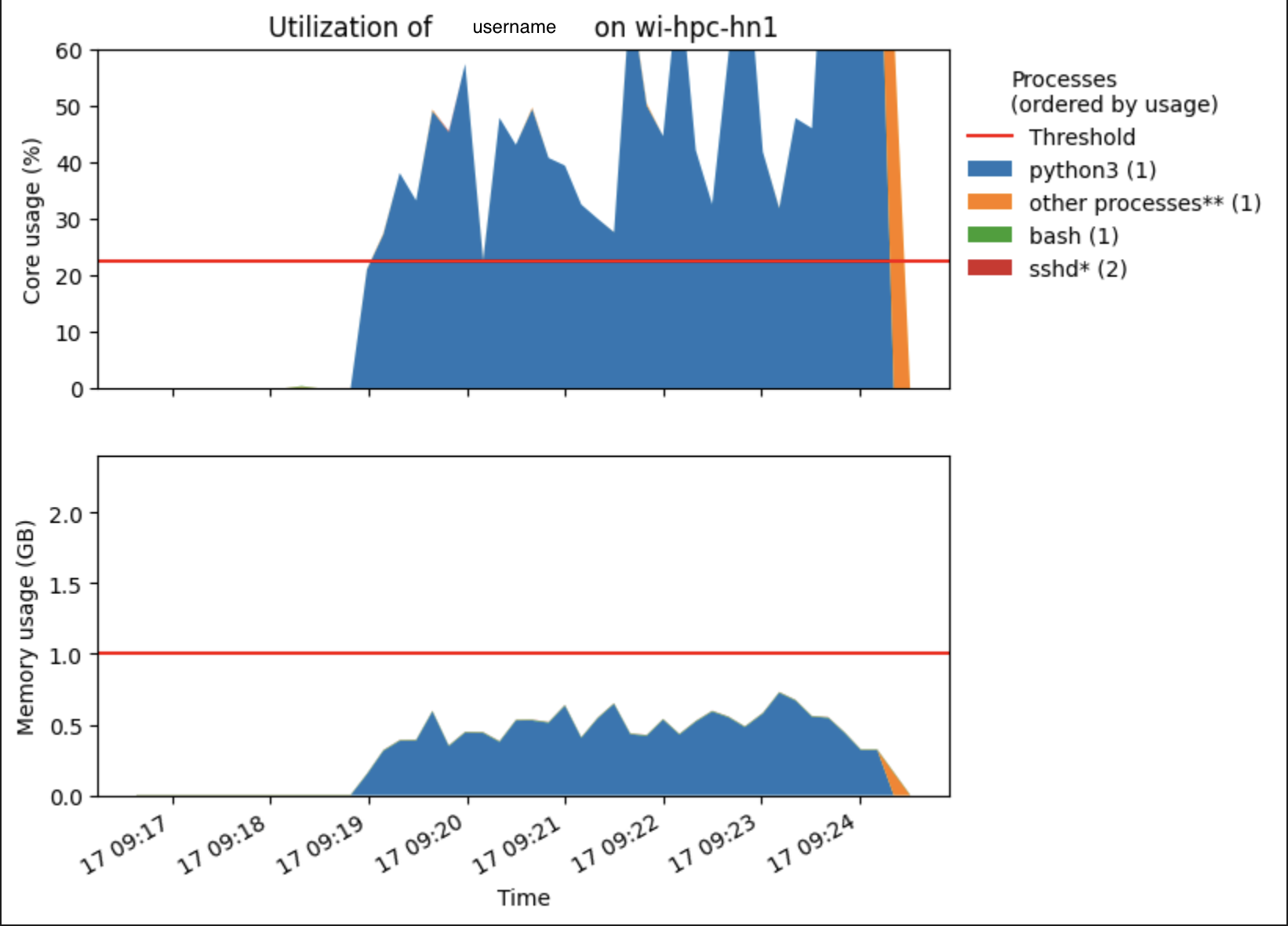

A user receives an email notification upon each violation. Below is an example email:

Violation of usage policy

A violation of the usage policy by username (firstName lastName) on wi-hpc-hn1 was automatically detected starting at HH:MM on MM/DD.

This may indicate that you are running computationally-intensive work on the interactive node (when it should be run on compute nodes instead).

You now have the status penalty1 because your usage has exceeded the thresholds for appropriate usage on the node. Your CPU usage is now limited to 80% of your original limit (0.8 cores) for the next 30 minutes. In addition, your memory limit is 80% of your original limit (0.8 GB) for the same period of time.

These limits will apply on wi-hpc-hn1.

High-impact processes

Usage values are recent averages. Instantaneous usage metrics may differ. The processes listed are probable suspects, but there may be some variation in the processes responsible for your impact on the node. Memory usage is expressed in GB and CPU usage is relative to one core (and may exceed 100% as a result).

| Process | Average core usage (%) | Average memory usage (GB) |
|---|---|---|
| node (6) | 0.12 | 1.11 |
| other processes** (1) | 0.05 | 0.00 |
| python (2) | 0.00 | 0.56 |
| bash (5) | 0.00 | 0.01 |
| tmux: server (1) | 0.00 | 0.00 |
| sh (1) | 0.00 | 0.00 |

Recent system usage

*This process is generally permitted on interactive nodes and is only counted against you when considering memory usage (regardless of the process, too much memory usage is still considered bad; it cannot be throttled like CPU). The process is included in this report to show usage holistically.

**This accounts for the difference between the overall usage and the collected PID usage (which can be less accurate). This may be large if there are a lot of short-lived processes (such as compilers or quick commands) that account for a significant fraction of the total usage. These processes are whitelisted as defined above.

Required User Actions

When a user receives an email alert that they have been placed into a penalty status, they should:

- Kill the process listed in the email that is using too much of the shared head node's resources, and/or reduce the resources that process uses.

- Submit an interactive job or schedule a batch job via sbatch. See Job Schedule Examples for how to do this.

- See Getting Help to learn more about how to correctly use the WI-HPC cluster.
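To find which process to stop, it can help to rank your own processes by memory use. The sketch below is one way to do that, assuming a Linux `ps` that accepts `-u` and `-o pid=,pcpu=,rss=,comm=`; the helper names are hypothetical, and the CPU figure that `ps` reports is a lifetime average rather than the recent average used in the alert email.

```python
import getpass
import subprocess


def parse_ps(output: str) -> list:
    """Parse `ps -o pid=,pcpu=,rss=,comm=` output, largest memory user first."""
    rows = []
    for line in output.splitlines():
        parts = line.split(None, 3)  # the command name may contain spaces
        if len(parts) < 4:
            continue
        pid, pcpu, rss_kib, comm = parts
        rows.append({
            "pid": int(pid),
            "cpu_percent": float(pcpu),
            "memory_gib": int(rss_kib) / 1024**2,  # ps reports RSS in KiB
            "command": comm.strip(),
        })
    return sorted(rows, key=lambda r: r["memory_gib"], reverse=True)


def my_processes() -> list:
    """Run ps for the current user and return the parsed, sorted rows."""
    result = subprocess.run(
        ["ps", "-u", getpass.getuser(), "-o", "pid=,pcpu=,rss=,comm="],
        capture_output=True, text=True, check=True,
    )
    return parse_ps(result.stdout)
```

Calling `my_processes()[:5]` on the head node lists your five largest processes; the offending one can then be stopped with `kill <pid>` and the work moved to a compute node through an interactive job or `sbatch`.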

Exempt Processes

Essential Linux utilities such as rsync, cp, scp, and SLURM commands are exempt. For a comprehensive list, please see Getting Help.